BRMs and Healthcare AI: Partnering for Success

Too often, healthcare professionals and healthcare IT divisions find themselves in conflict despite having nearly identical goals of improving patient care, reducing burden on providers, and providing care to patients at lower costs.

This is particularly evident in attempts to use artificial intelligence to provide better patient care and reduce burden on caregivers – but why? What makes AI different?

The cultural narrative around artificial intelligence in healthcare has created a terrifying dichotomy in which AI will simultaneously replace medical professionals by providing more care than humans and harm innumerable patients by providing worse care than humans and creating artificial barriers to care. Neither, of course, is strictly true, but these fears loom large in many discussions about AI implementation in healthcare. Additionally, the AI regulatory environment significantly lags the technology, leaving hospital systems to create governance criteria for themselves.

The technology’s capabilities and clinical needs are not always well matched, and the hype and cultural conversation around AI’s capabilities are often overblown. In this article, we will discuss some common challenges to implementing AI in healthcare and illustrate how the role of the Business Relationship Manager, or BRM, can smooth this path to implementation, through a hypothetical project idea and evaluation process.

But first, what IS the role of the Business Relationship Manager? Philosophically, the business relationship manager role is any role that leverages positive relationships to increase value in the organization. This role, often filled by a certified BRM, maintains relationships with key leaders on both the clinical and IT sides. One of the BRM’s main functions is to connect IT-related project ideas (and the people who have them) with evaluation processes and implementation resources. A good BRM will be a trusted advisor and strategic partner to their clinical leadership, and a respected voice for the clinical “customer” within IT.

BRMs bring special expertise to these conversations because they are familiar with both the clinical workflows and the technical requirements of the IT division. They can speak fluently with both providers and technical teams and help navigate implementation processes. A good BRM will advocate for responsible innovation, hold teams accountable for adhering to process, and ensure that the value proposed by new technology is realized.

The BRM role really shines in a situation where a clinician is bringing a technology solution for a clinical or operational problem.

Let’s follow a hypothetical example and say that a clinician has just returned from a conference where several vendors presented technical solutions for digital documentation support. The clinician is frustrated with the time spent responding to inbox messages from patients and caregivers and feels that these vendors may be able to help. Here, the BRM role gives a voice to a frustrated “consumer” of healthcare IT services and products and creates a formal space for idea discussion. The first action on the part of the BRM is to sit down with the clinician and create a document containing the entire problem and proposed solution.

Problem: Inbox messaging takes up too much time for the clinician

Solution: Implement one of the solutions from MDNoteFlow/ChartEase/MSGSync which will take 30 minutes and solve 100% of our problems.

In this not-exactly-complete document, we can see one of the challenges of AI implementation in healthcare. The stated problem is overly broad – how are we defining “too much time”? What is the right amount of time? What kind of messaging is in scope? The solution is vague. Several vendors are offering very similar products, with unrealistic expectations about implementation effort and value offered. Without a BRM involved, this idea might get rejected by IT out of hand as unrealistic.

The BRM’s job now is to flesh out both the description of the problem and the proposed solution with information that will help the IT division evaluate and prioritize the project. Expanding and interpreting an initial idea into a standardized framework is a large part of the BRM’s role in strategic partnership between IT and clinical divisions. This might take the form of additional requirements documentation, a fuller description of the problem, estimates of the effort required to actually implement the solution, and the proposed safety or financial impact. After this editing pass by the BRM, which often involves follow-up meetings with the requestor and with impacted parties in the IT division, our request might look like this:

Problem: Inbox messaging takes up too much time for the clinician. Over the past five years, time spent responding to inbox messages from both patients and other providers has increased by 13%. Most of that increased time is spent outside of official work hours, resulting in increased “pajama time” which causes provider burnout and inefficient work. Many of the messages require similar responses, which a chatbot or other AI tool could draft for the provider to read, edit, and send. This would reduce provider time spent responding to messages.

Solution: Several vendor solutions exist in this space, as well as a native AI message draft tool within the electronic health record (EHR). Each of these should be evaluated for their ability to ingest message content and suggest appropriate responses. We should also consider the difficulty of implementing each of these solutions within our IT ecosystem and evaluate whether using the product as designed will reduce provider time spent responding to messages. We will need to estimate effort for training providers in how to use this technology, as well as effort for the reporting teams to develop dashboards to track success metrics. This request will require evaluation by our AI Governance Board.

The BRM has brought their clinical workflow knowledge to bear on the problem described, understanding that inbox messages come from other providers as well as from patients, and clearly documenting the increase in pajama time as an issue for providers.

The BRM has also used their technical knowledge to remove the vendor’s promise that implementation will take 30 minutes and to substitute several reality-based criteria for evaluation. Finally, the BRM has identified an additional governance group that will need to weigh in as this project is evaluated. With the assistance of the BRM, this healthcare AI project has avoided three common early complications: a poorly scoped problem, poorly defined value, and early rejection by IT groups.

When the BRM communicates their efforts back to the requesting provider, the conversation shifts quickly to frustration with the process. “Why do we have to have all this extra information? I already gave you the solution,” says the provider. The BRM explains the intake and evaluation process and illustrates the value of a thorough evaluation prior to implementation.

Luckily, the BRM has contacts at another institution that has just paused their pilot of an AI message bot due to its inaccurate message suggestions. This anecdote helps underline the importance of the evaluation process to the provider. They’re not happy about it, but they understand. Although frustrations with process are not unique to AI projects, the novelty of artificial intelligence can complicate routine evaluations, as we’ll see in the next few steps of our hypothetical project evaluation.

Now that the project has a more detailed scope and proposed solution, the next step is to bring the proposed project to IT for evaluation. In this example, IT has regular evaluation meetings where all IT teams can ask questions, detail the work their team would need to perform to complete the project, give estimates of the time it would take, and approve moving the project forward. Getting this project on the schedule is no problem, and when it comes up on the agenda, several of the teams have follow-up questions and comments.

- Can we detail how the vendors will be accessing the information in providers’ messages?

- What additional information from the patient record will need to be accessed to generate a message back to the patient?

- How is the vendor storing information? For how long? Is the vendor training their language model on our data?

- What is the plan if the vendor experiences an outage?

- Are there documented APIs for integration?

- How is identity managed?

- Is the integration approach compatible with HL7, FHIR, or other existing communication protocols?

- What triggers the passage of information between the EHR and the vendor?

- How is additional information pulled?

- How often is information passed between the EHR and the vendor?

- What is the lag time between requesting a message draft and provision of the message draft?

- What size (how many providers, how many messages, how many weeks) is the proposed pilot, and are the vendors capable of scaling to enterprise volume?

- What kind of training are the vendors providing? What is the ongoing support model?

- What are the costs to implementation?

- Can we document the efficacy and equity of the tool?

- Does it deliver similar results for all patients/providers with all content? If not, can we limit the tool to the content that it does deliver well?

- How will we know if we are achieving our desired value?

- How will we measure reduced provider pajama time?

- What if patients or providers hate the messages and they require extended messaging?

- Does using AI to respond to messages affect the patients’ perspective of our medical expertise?

- How will we know if the message content is reliable?

- Does this place us at additional legal risk?

- Should we pilot AI in such a patient-facing space? Would a better strategy be to find an AI use case that is more “internal” such as billing or risk scoring?

- What legal agreements do we need to proceed with a pilot?

Some of these questions are routine for any technology implementation within a healthcare IT environment. However, some of these questions highlight ethical and philosophical concerns unique to AI. Questions about unintended consequences of large language models, such as “what if patients hate the messages and we actually spend MORE time messaging?”, can be recast as metrics for evaluating pilots. Questions about strategy, such as “should we pilot AI in such a patient-facing space or in a more internal area?”, are worthy of further discussion outside the format of the project evaluation process.
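As one illustration of recasting a question as a metric, the sketch below shows how “pajama time” might be computed from message-response records and compared between a baseline period and a pilot period. It is a minimal sketch under stated assumptions, not a validated method: the record fields (`provider_id`, `sent_at`, `minutes_spent`), the weekday 08:00 to 18:00 work window, and the function names are all hypothetical, and a real measurement would depend on whatever audit data the EHR actually makes available.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, time


@dataclass(frozen=True)
class MessageResponse:
    """Hypothetical record of one inbox reply; field names are illustrative."""
    provider_id: str
    sent_at: datetime      # when the provider sent the reply
    minutes_spent: float   # time spent composing the reply


# Assumed definition of official work hours: weekdays, 08:00 to 18:00.
WORK_START = time(8, 0)
WORK_END = time(18, 0)


def is_after_hours(moment: datetime) -> bool:
    """Return True when a reply falls outside the assumed work window."""
    if moment.weekday() >= 5:  # 5 = Saturday, 6 = Sunday
        return True
    return not (WORK_START <= moment.time() < WORK_END)


def pajama_minutes(responses: list[MessageResponse]) -> dict[str, float]:
    """Total after-hours messaging minutes per provider.

    Providers with no after-hours replies do not appear in the result.
    """
    totals: dict[str, float] = defaultdict(float)
    for response in responses:
        if is_after_hours(response.sent_at):
            totals[response.provider_id] += response.minutes_spent
    return dict(totals)


def percent_change(baseline: float, pilot: float) -> float:
    """Relative change from the baseline period to the pilot period, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (pilot - baseline) / baseline * 100.0
```

Running `pajama_minutes` over the same providers before and during a pilot, then passing the two totals to `percent_change`, gives a single number that can be compared across vendors. A negative value means less after-hours messaging. The same pattern could cover the "what if we spend MORE time messaging" concern by totaling all minutes rather than only after-hours minutes.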

The IT division agrees to defer a decision about whether to proceed with a pilot until the BRM brings back some additional information. The BRM will not be able to generate all this information on their own. Gathering it requires assistance from technical teams, the clinician, and the vendor, plus help from a business analyst or project manager with standardizing documentation. This takes time, and during this information-gathering process the BRM keeps the requesting clinician informed about progress and provides regular updates to the IT division.

When the requesting clinician reaches out to the BRM to voice impatience with the process, the BRM knows that part of the delay is that the AI Governance Board is still being spun up and is not yet formally meeting. The regulatory environment for healthcare AI is still forming, leaving hospitals to create their own governance structures and criteria. Our hypothetical hospital is doing well – they’ve seen the need for a governance board, and identified a few people to be on it, but the governance process is not yet formalized, another common problem in healthcare AI.

The BRM brings the clinician to the proposed members of the AI Governance Board to advocate directly for their project. The clinician and BRM co-present a few slides clearly describing the problem and the proposed solution. The clinician’s emotional description of the time spent responding to messages, paired with the BRM’s neatly written documentation of the proposed pilots to evaluate an AI tool to help providers avoid burnout, resonates well with the Board.

They decide to approve the project and ask that the clinician come back to present interim results from the pilots once the Board is formally meeting. The BRM is asked to provide a list of evaluation criteria for the pilots so that any similar cases that come before the Board can be measured by standard metrics. In this case, the BRM has used their relationship skills to avoid a long delay while a governance board is formalized and helped create standard evaluation criteria.

After this discussion, and the additional information provided by the BRM, the IT division approves moving forward with the pilots. Contracts are drafted, success metrics set, and the pilots begin. The BRM and requesting clinician stay involved throughout the process, ensuring that standard metrics are used for each pilot, and that the clinician’s desired result – less time spent composing messages – remains the goal. When the pilots end, the BRM brings the requestor and IT division back together to jointly give opinions on which pilots should continue, terminate, or expand. Finally, reporting metrics are handed off to the appropriate support team, and the BRM continues to confirm that the implemented solution is performing as expected.

Without a trusted partner to bridge gaps in IT processes and advocate for incoming ideas, projects and innovations can stall, especially innovations involving novel technology such as artificial intelligence.

AI can overpromise results and is often seen as a universal solution for problems in healthcare IT. Those problems must be carefully defined if real value is to come from implementing a new technology. A new AI solution for healthcare should undergo scoping and requirements documentation so that it can be evaluated and prioritized by both IT and the clinical area.

The BRM role brings essential knowledge and relationships to this documentation, evaluation, and prioritization process. A good BRM will possess strong clinical workflow knowledge – including strong enough relationships to facilitate shadowing clinical workflows by IT team members and to generate powerful narratives to advocate for innovation. They will also have basic technical knowledge, including relationships with other institutions that have implemented similar technology.

A BRM brings the ability to bridge communication gaps and hold both sides accountable to the evaluation processes, including governance evaluation, success metrics, follow-ups, and post-go-live evaluations. With a good BRM, the road to innovative, safe AI in healthcare is much smoother.

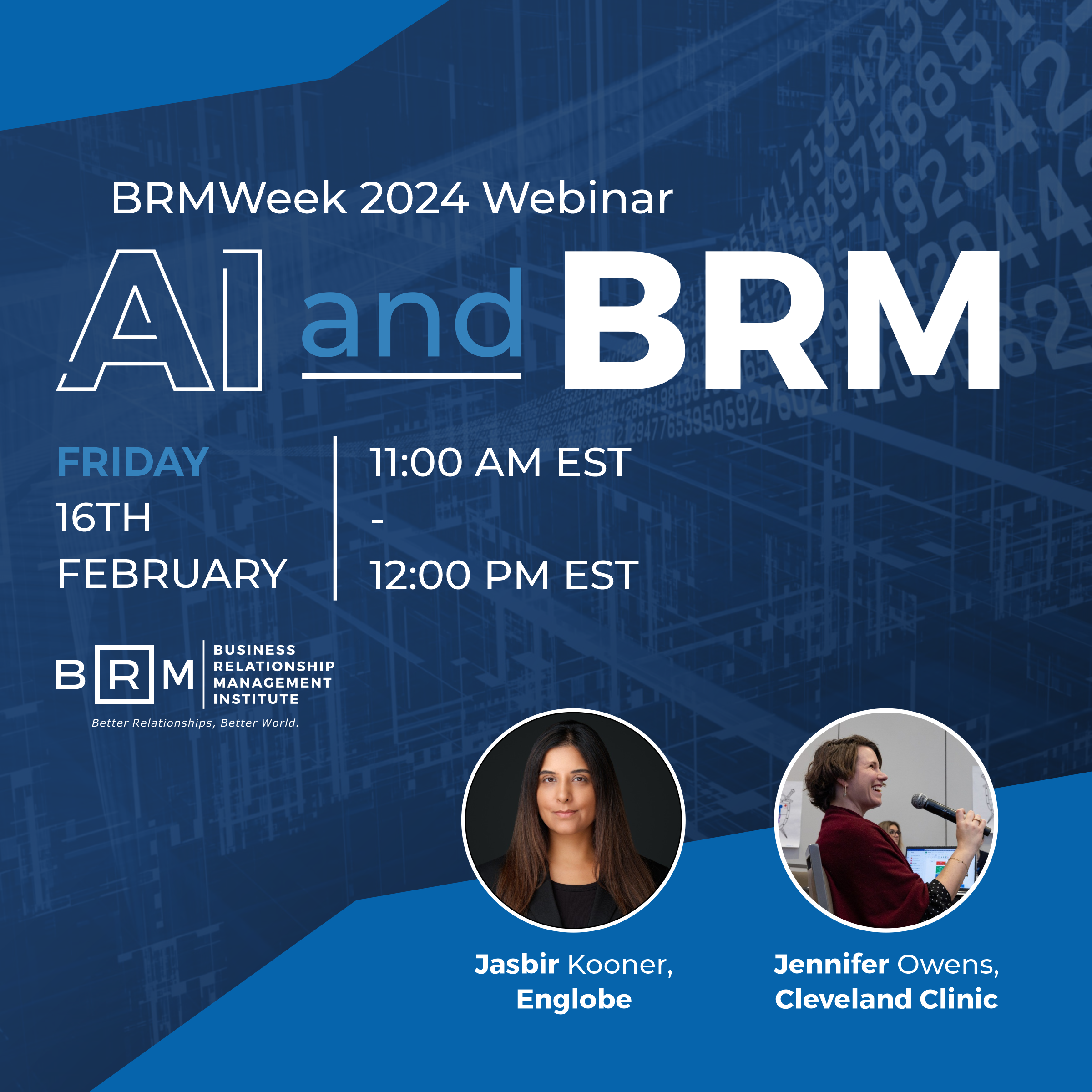

Attend the upcoming BRMWeek webinar on AI and BRM to hear more from Jennifer and other BRMs who are passionate about Generative AI.

February 16, 2024, 11:00 AM - 12:00 PM EST

About the Author

Jennifer Owens has been working in healthcare and life sciences since 2007. Over the past few years working in healthcare IT, Jennifer has become passionate about healthcare data, ethics and business relationship management. With a desire to improve healthcare and the lives of individuals, Jennifer continues to make an impact as a Senior IT BRM at Cleveland Clinic.